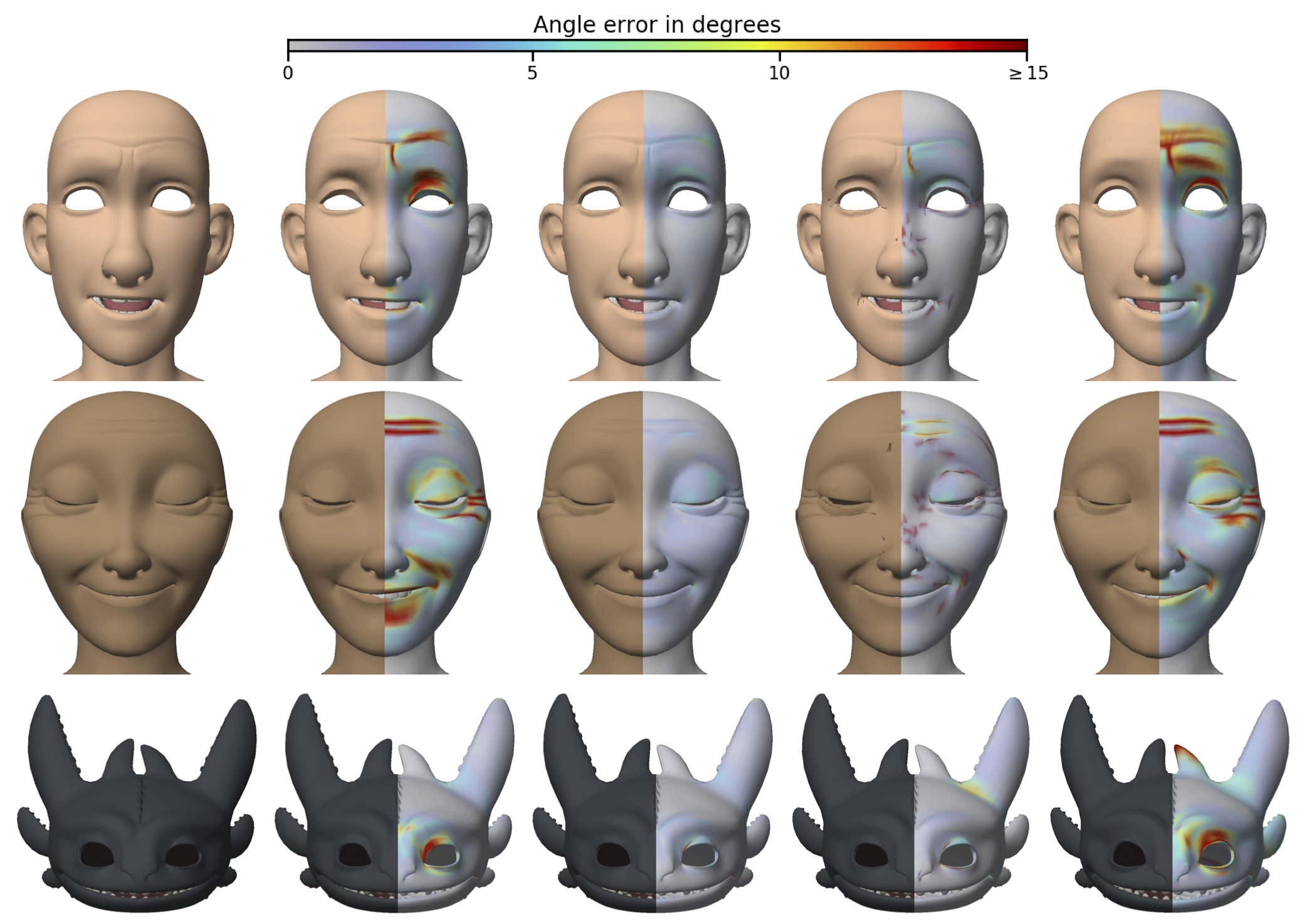

View-Dependent Adaptive Cloth Simulation with Buckling Compensation

Abstract

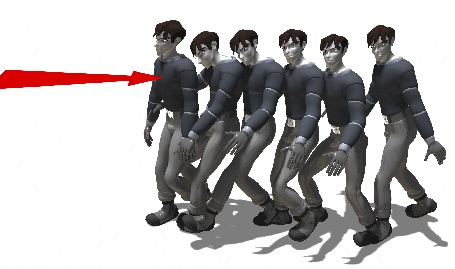

This paper describes a method for view-dependent cloth simulation using dynamically adaptive mesh refinement and coarsening. Given a prescribed camera motion, the method adjusts the criteria controlling refinement to account for visibility and apparent size in the camera's view. Objectionable dynamic artifacts are avoided by anticipative refinement and smoothed coarsening, while locking in extremely coarsened regions is inhibited by modifying the material model to compensate for unresolved sub-element buckling. This approach preserves the appearance of detailed cloth throughout the animation while avoiding the wasted effort of simulating details that would not be discernible to the viewer. The computational savings realized by this method increase as scene complexity grows. Compared with view-independent adaptive simulations, the approach produces a 2x speed-up for a single character and more than 4x for a small group; compared with non-adaptive simulations, the corresponding speed-ups are 5x and 9x.
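The abstract does not spell out the refinement criteria. As a rough illustration only, the Python sketch below shows one way a view-dependent sizing bound could be computed, assuming a pinhole camera and a simple circular view cone as the visibility test (occlusion is ignored). It also shows how a prescribed camera path allows anticipative refinement, by taking the finest bound over upcoming camera poses, and how coarsening could be smoothed by capping per-step growth. The names `Camera`, `view_sizing`, `anticipative_sizing`, and `smoothed_coarsening`, and every default parameter (`target_px`, `offscreen_scale`, `min_len`, `max_len`, `max_growth`), are hypothetical. This is not the paper's formulation, and it omits the buckling compensation entirely.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Camera:
    """Hypothetical pinhole camera for one frame of a prescribed camera path."""
    position: Vec3
    forward: Vec3      # unit-length viewing direction
    focal_px: float    # focal length expressed in pixels
    half_fov: float    # half-angle (radians) of a circular view cone


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def view_sizing(point: Vec3, cam: Camera, target_px: float = 4.0,
                offscreen_scale: float = 8.0, min_len: float = 0.005,
                max_len: float = 0.5) -> float:
    """Largest world-space edge length allowed near `point` for one camera.

    An edge of length l at depth z projects to roughly focal_px * l / z
    pixels, so keeping it below target_px pixels gives
    l <= target_px * z / focal_px. Points outside the view cone get a
    looser bound; occlusion is ignored in this sketch.
    """
    d = _sub(point, cam.position)
    depth = _dot(d, cam.forward)
    if depth <= 0.0:
        return max_len  # behind the camera: allow the coarsest mesh
    length = target_px * depth / cam.focal_px
    dist = math.sqrt(_dot(d, d))  # dist >= depth > 0 here
    if depth / dist < math.cos(cam.half_fov):
        length *= offscreen_scale  # off-screen: coarsen more aggressively
    return min(max(length, min_len), max_len)


def anticipative_sizing(point: Vec3, upcoming: list[Camera], **kw) -> float:
    """Finest requirement over camera poses in a look-ahead window, so a
    region is refined before it becomes visible or grows on screen."""
    return min(view_sizing(point, cam, **kw) for cam in upcoming)


def smoothed_coarsening(prev_len: float, target_len: float,
                        max_growth: float = 0.1) -> float:
    """Refine immediately, but let the allowed length grow by at most the
    fraction `max_growth` per step, so coarsening happens gradually."""
    if target_len <= prev_len:
        return target_len
    return min(target_len, prev_len * (1.0 + max_growth))


if __name__ == "__main__":
    cam_now = Camera((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1000.0, 0.5)
    cam_later = Camera((0.0, 0.0, 8.0), (0.0, 0.0, 1.0), 1000.0, 0.5)
    p = (0.0, 0.0, 10.0)
    print(view_sizing(p, cam_now))                       # about 0.04
    print(anticipative_sizing(p, [cam_now, cam_later]))  # about 0.008
```

Taking the minimum over the look-ahead window is what makes the refinement anticipative: the point at depth 10 only needs edges of about 0.04 for the current camera, but it is refined to about 0.008 now because the camera will later be 2 units away.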

Citation

Woojong Koh, Rahul Narain, and James F. O'Brien. "View-Dependent Adaptive Cloth Simulation with Buckling Compensation". IEEE Transactions on Visualization and Computer Graphics, 21(10):1138–1145, October 2015.

Supplemental Material