Fast and Deep Facial Deformations

Abstract

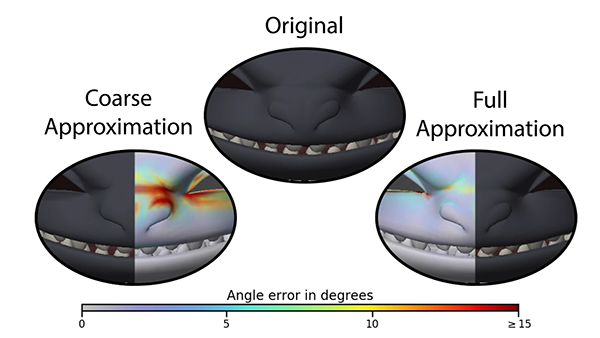

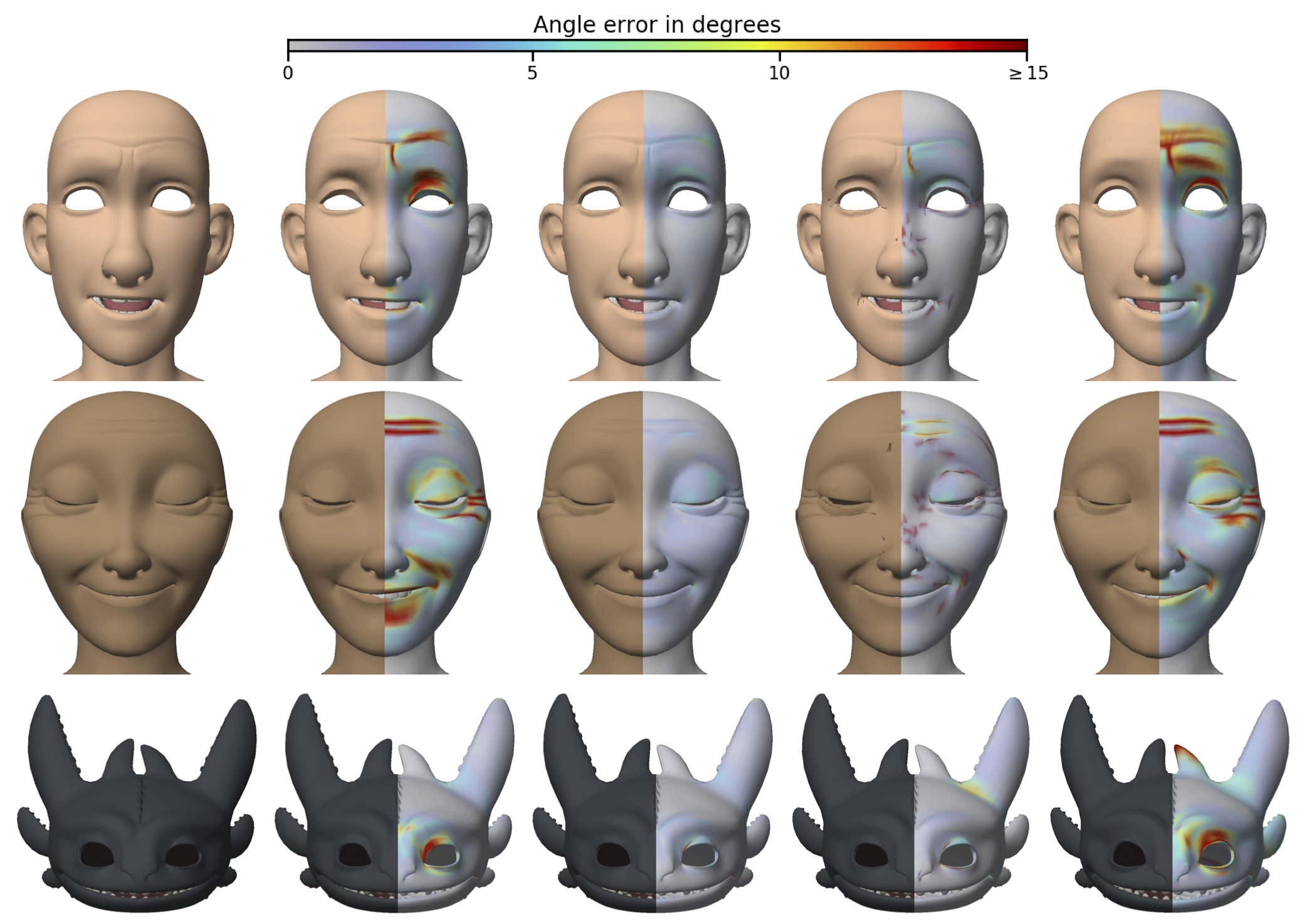

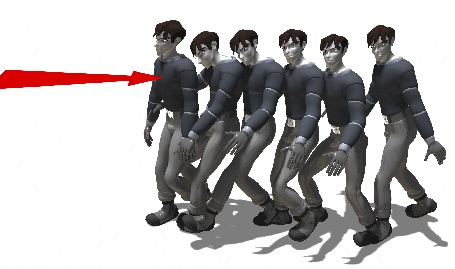

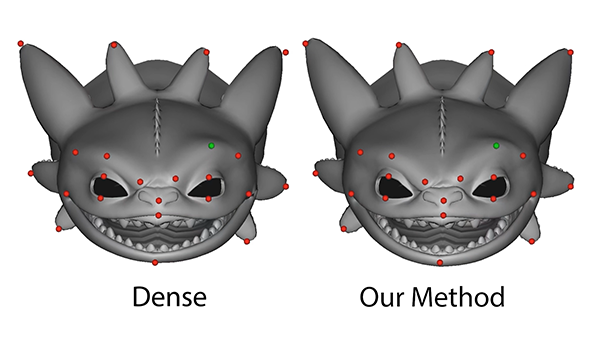

Film-quality characters typically display highly complex and expressive facial deformation. The underlying rigs used to animate the deformations of a character’s face are often computationally expensive, requiring high-end hardware to deform the mesh at interactive rates. In this paper, we present a method using convolutional neural networks for approximating the mesh deformations of characters’ faces. For the models we tested, our approximation runs up to 17 times faster than the original facial rig while still maintaining a high level of fidelity to the original rig. We also propose an extension to the approximation for handling high-frequency deformations such as fine skin wrinkles. While the implementation of the original animation rig depends on an extensive set of proprietary libraries, making it difficult to install outside of an in-house development environment, our fast approximation relies on the widely available and easily deployed TensorFlow libraries. In addition to allowing high-frame-rate evaluation on modest hardware and in a wide range of computing environments, the large speed increase also enables interactive inverse kinematics on the animation rig. We demonstrate our approach and its applicability through interactive character posing and real-time facial performance capture.
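The abstract notes that the speed-up makes interactive inverse kinematics (IK) on the rig practical. The sketch below illustrates the general idea of gradient-based IK through a differentiable rig approximation: rig parameters are adjusted by gradient descent until selected vertex coordinates reach user-specified targets. It is a toy under stated assumptions, not the authors' solver. The names `approx_rig` and `ik_solve` are hypothetical, and a fixed linear model (base positions plus a constant Jacobian) stands in for the learned convolutional network so the gradient can be written by hand. With a neural approximation, the gradient would instead come from automatic differentiation, for example TensorFlow's `tf.GradientTape`.

```python
# Toy illustration of IK through a differentiable rig approximation.
# ASSUMPTION: a hypothetical linear model (vertices = base + J @ params)
# stands in for the learned CNN, so the gradient can be derived by hand.


def approx_rig(params, base, jac):
    """Return flattened vertex coordinates for the given rig parameters."""
    return [b + sum(j * p for j, p in zip(row, params))
            for b, row in zip(base, jac)]


def ik_solve(target_idx, target_pos, base, jac, steps=500, lr=0.1):
    """Gradient descent on rig parameters so that the coordinates listed
    in target_idx move toward target_pos (squared-error objective)."""
    params = [0.0] * len(jac[0])
    for _ in range(steps):
        verts = approx_rig(params, base, jac)
        grad = [0.0] * len(params)
        # Accumulate d/dparams of sum (v_i - t_i)^2 over constrained coords.
        for i, t in zip(target_idx, target_pos):
            residual = verts[i] - t
            for k in range(len(params)):
                grad[k] += 2.0 * residual * jac[i][k]
        params = [p - lr * g for p, g in zip(params, grad)]
    return params


if __name__ == "__main__":
    # Four flattened coordinates driven by two hypothetical rig controls.
    base = [0.0, 0.0, 0.0, 0.0]
    jac = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
    # Pin coordinate 0 to 0.3 and coordinate 1 to -0.2.
    params = ik_solve([0, 1], [0.3, -0.2], base, jac)
    print([round(p, 4) for p in params])  # → [0.3, -0.2]
```

Because each solver iteration evaluates the approximation and its gradient, the cost of one rig evaluation directly bounds how many iterations fit in an interactive frame, which is why a faster approximation matters for IK.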

Citation

Stephen W. Bailey, Dalton Omens, Paul Dilorenzo, and James F. O'Brien. "Fast and Deep Facial Deformations". ACM Transactions on Graphics, 39(4):94:1–15, August 2020. Presented at SIGGRAPH 2020, Washington D.C.

Supplemental Material

Demonstration Video (high-resolution download)