Motion Synthesis from Annotations

Abstract

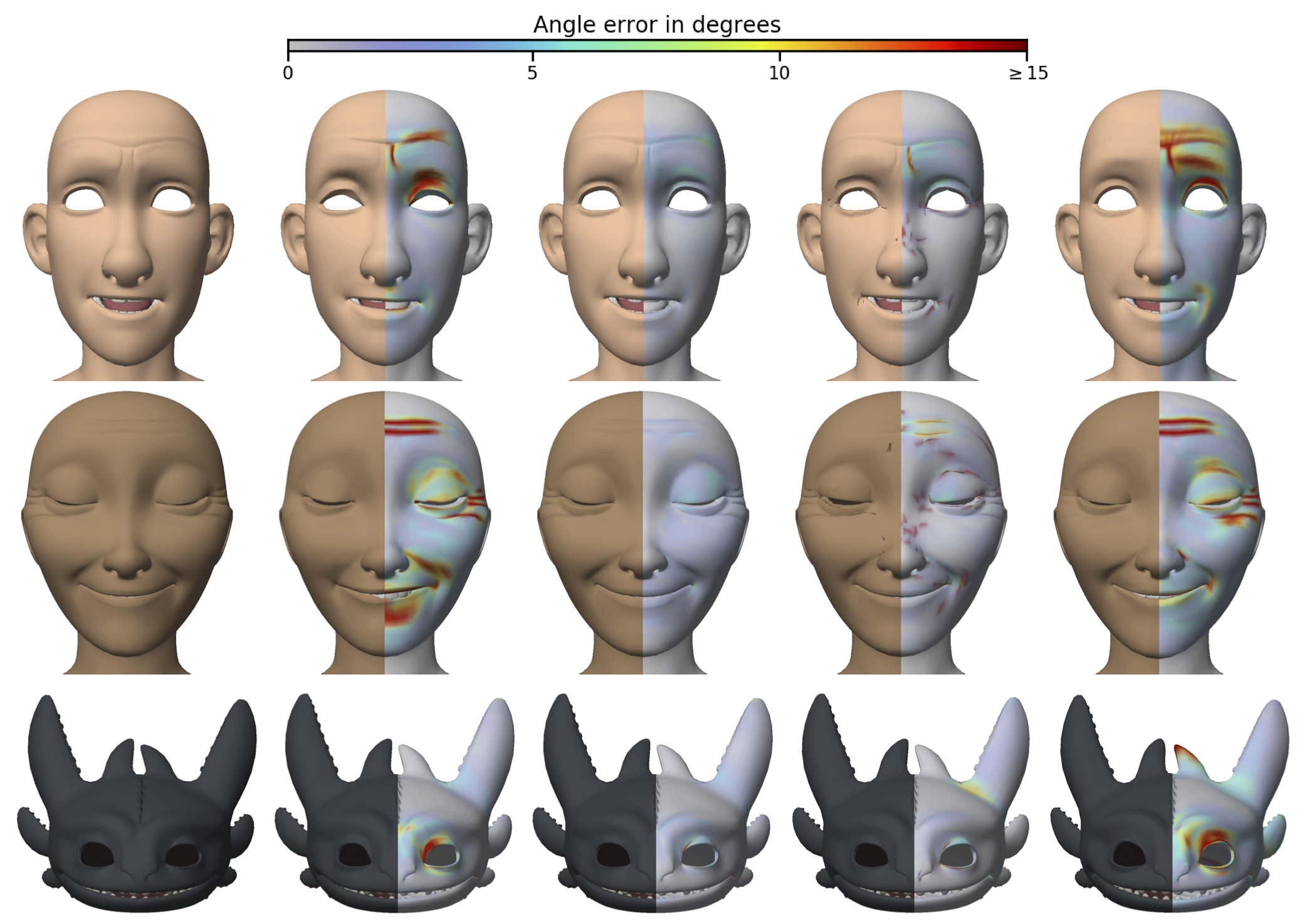

This paper describes a framework that allows a user to synthesize human motion while retaining control of its qualitative properties. The user paints a timeline with annotations, such as walk, run, or jump, drawn from a vocabulary that the user chooses freely. The system then assembles frames from a motion database so that the final motion performs the specified actions at the specified times. The motion can also be forced to pass through particular configurations at particular times, and to go to a particular position and orientation. Annotations can be painted positively (for example, must run), negatively (for example, may not run backwards), or as a don't-care. The system uses a novel search method, based on dynamic programming at several scales, to obtain a solution efficiently so that authoring is interactive. Our results demonstrate that the method can generate smooth, natural-looking motion. The annotation vocabulary can be chosen to fit the application, and it allows specification of composite motions (run and jump simultaneously, for example). The process requires a collection of motion data that has been annotated with the chosen vocabulary. This paper also describes an effective tool, based on repeated use of support vector machines, that lets a user annotate a large collection of motions quickly and easily so that they can be used with the synthesis algorithm.
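To make the idea of "assembling frames by dynamic programming" concrete, here is a minimal single-scale sketch. It is not the paper's multi-scale search: it assumes the timeline has already been cut into time slots, that each slot must be filled by one candidate clip from the database, and that two hypothetical cost tables are given, `data_cost[t][c]` (how badly clip `c` matches the annotations painted at slot `t`) and `trans_cost[a][b]` (how visible a cut from clip `a` to clip `b` would be). A Viterbi-style recurrence then finds the cheapest clip sequence.

```python
from typing import List, Sequence


def viterbi_clip_search(data_cost: Sequence[Sequence[float]],
                        trans_cost: Sequence[Sequence[float]]) -> List[int]:
    """Return the clip index chosen for each time slot, minimizing total cost.

    Hypothetical inputs (assumptions, not the paper's data structures):
      data_cost[t][c]  : cost of playing clip c during time slot t
      trans_cost[a][b] : cost of cutting from clip a to clip b
    """
    n_slots = len(data_cost)
    n_clips = len(data_cost[0])
    # best[t][c]: cheapest total cost of any sequence that ends with clip c at slot t
    best = [list(data_cost[0])]
    back = [[-1] * n_clips]
    for t in range(1, n_slots):
        row, ptr = [], []
        for c in range(n_clips):
            # pick the predecessor minimizing cost-so-far plus the transition into c
            prev = min(range(n_clips), key=lambda p: best[t - 1][p] + trans_cost[p][c])
            row.append(best[t - 1][prev] + trans_cost[prev][c] + data_cost[t][c])
            ptr.append(prev)
        best.append(row)
        back.append(ptr)
    # trace back from the cheapest clip in the final slot
    path = [min(range(n_clips), key=lambda c: best[-1][c])]
    for t in range(n_slots - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


# Toy example: clips 0 = walk, 1 = run, 2 = jump; the timeline asks for
# walk, walk, run, jump, and every cut between different clips costs 0.2.
data = [[0.0, 1.0, 1.0],
        [0.0, 1.0, 1.0],
        [1.0, 0.0, 1.0],
        [1.0, 1.0, 0.0]]
trans = [[0.0 if a == b else 0.2 for b in range(3)] for a in range(3)]
path = viterbi_clip_search(data, trans)  # → [0, 0, 1, 2]
```

The transition term is what keeps the result smooth: a sequence that ignored it could switch clips every slot, while here switching only pays off when the annotation mismatch it avoids is larger than the cut cost.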

Citation

Okan Arikan, David A. Forsyth, and James F. O'Brien. "Motion Synthesis from Annotations". In Proceedings of ACM SIGGRAPH 2003, pages 402–408, August 2003.

Supplemental Material

Full Paper Video

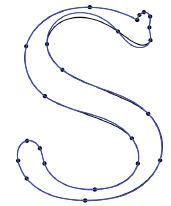

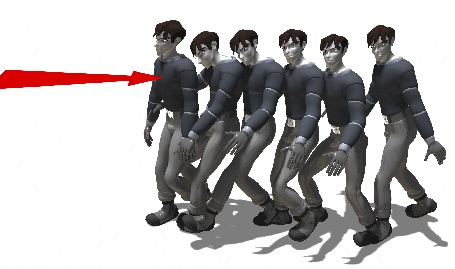

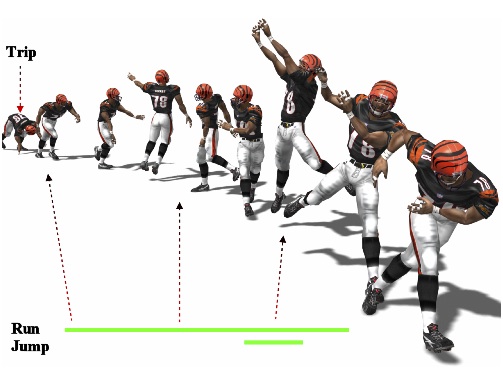

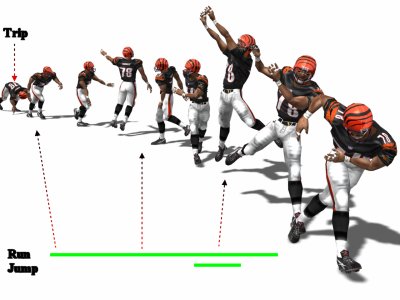

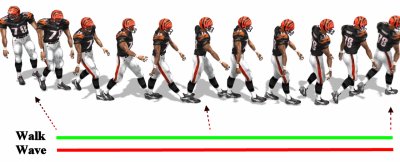

Below are some examples of the constraints that we can put on the motion. A motion that looks human and satisfies these constraints is synthesized automatically by our system.

Here, we synthesize a motion that starts from a "keyframe" (labeled "trip"), runs, and then jumps while running.

We can constrain the synthesized motion not to perform certain actions. Here, we synthesize a motion that walks but does not wave (indicated by the red constraint).

When we turn the "do not wave" constraint into a "wave" constraint (from red to green), we get the motion on the left.

Constraints can be manipulated on the timeline. For example, we get the motion on the left when we ask for a motion that walks but waves only during the second half.
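One way to read the painted timeline is as a per-frame, per-label mark: must do, must not do, or don't care. The sketch below counts how many painted marks a candidate motion violates. The encoding (`POSITIVE`, `NEGATIVE`, `DONT_CARE` as 1, -1, 0) and the function name are hypothetical, and so is the choice to paint "wave" negatively over the first half to express "waves only during the second half"; leaving that half unpainted (don't care) would be an equally valid request with a looser meaning.

```python
from typing import Dict, List, Set

# Hypothetical encoding of one painted timeline entry.
POSITIVE, NEGATIVE, DONT_CARE = 1, -1, 0


def annotation_cost(painted: Dict[str, List[int]], motion_labels: List[Set[str]]) -> int:
    """Count frames where a candidate motion violates the painted annotations.

    painted[label][t] is POSITIVE (must do), NEGATIVE (must not do) or DONT_CARE.
    motion_labels[t] is the set of labels the candidate motion carries at frame t.
    """
    violations = 0
    for label, track in painted.items():
        for t, mark in enumerate(track):
            has = label in motion_labels[t]
            # don't-care frames never contribute, whatever the motion does
            if (mark == POSITIVE and not has) or (mark == NEGATIVE and has):
                violations += 1
    return violations


# "Walk throughout, wave only during the second half" over six frames.
painted = {"walk": [POSITIVE] * 6, "wave": [NEGATIVE] * 3 + [POSITIVE] * 3}
waves_late = [{"walk"}] * 3 + [{"walk", "wave"}] * 3
always_waving = [{"walk", "wave"}] * 6
print(annotation_cost(painted, waves_late))     # 0
print(annotation_cost(painted, always_waving))  # 3
```

Turning the red "do not wave" bar into a green "wave" bar amounts to flipping `NEGATIVE` to `POSITIVE` in one track, which changes which candidate motions are cheap.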

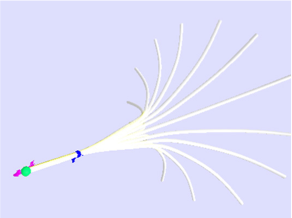

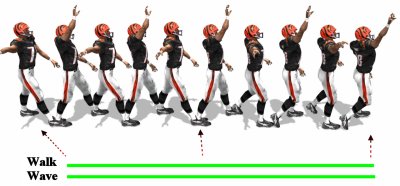

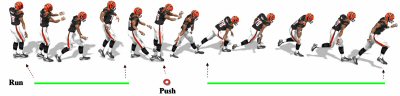

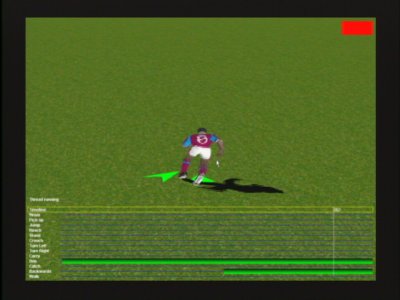

The search can also take positional constraints into account while synthesizing motions for given annotations. Here, the figure is constrained to run forward and then run backwards, and the position constraints, indicated by green arrows, are enforced. For clarity, the running-forward section of the motion is shown on top and the running-backwards section on the bottom.
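A position and orientation constraint needs some measure of where an assembled motion ends up. The sketch below assumes, hypothetically, that each frame stores planar root motion in the body's own frame (forward step, sideways step, heading change); it accumulates those into a world position and heading and scores the squared distance to a target. The names and the `angle_weight` parameter are illustrative, not taken from the paper.

```python
import math
from typing import List, Tuple

Step = Tuple[float, float, float]  # (forward, lateral, turn in radians), hypothetical layout


def integrate_root(steps: List[Step]) -> Tuple[float, float, float]:
    """Accumulate per-frame local root motion into world (x, z, heading)."""
    x = z = heading = 0.0
    for fwd, lat, turn in steps:
        # rotate the local displacement by the current heading, then apply the turn
        x += fwd * math.cos(heading) - lat * math.sin(heading)
        z += fwd * math.sin(heading) + lat * math.cos(heading)
        heading += turn
    return x, z, heading


def position_cost(steps: List[Step], target: Tuple[float, float, float],
                  angle_weight: float = 1.0) -> float:
    """Squared position error plus weighted squared heading error at the end of the motion."""
    x, z, h = integrate_root(steps)
    tx, tz, th = target
    # wrap the heading error into (-pi, pi] so a full turn counts as no error
    dh = math.atan2(math.sin(h - th), math.cos(h - th))
    return (x - tx) ** 2 + (z - tz) ** 2 + angle_weight * dh ** 2


# Ten unit steps straight ahead reach (10, 0) with unchanged heading.
print(integrate_root([(1.0, 0.0, 0.0)] * 10))  # (10.0, 0.0, 0.0)
```

Running backwards simply means negative forward steps, so the same cost applies to the backwards section; because it only looks at the final root state, it can be added to the score of a complete candidate motion.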

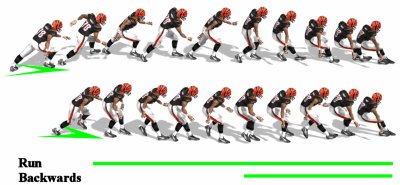

Here the motion is constrained to interpolate the "push" frame while running before and after the constraint.

In addition to matching the annotations, a specific frame or motion can be forced to be used at a specific time. Above, the person is forced to pass through a pushing frame in the middle of the motion while running before and after the pushing.
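One simple way to express "this frame must be used at this time" in a cost-table search is to make every other choice at that time infinitely expensive. The sketch below assumes the same hypothetical `data_cost[t][c]` table of per-slot clip costs; it is an illustration of a hard constraint, not necessarily how the paper's search handles frame constraints.

```python
import math
from typing import List


def force_clip(data_cost: List[List[float]], slot: int, clip: int) -> List[List[float]]:
    """Return a copy of data_cost in which only `clip` is allowed at time `slot`.

    Every other candidate at that slot gets infinite cost, so a minimum-cost
    search over the table must select `clip` there, provided some path through
    it (including transitions into and out of it) has finite cost.
    """
    forced = [row[:] for row in data_cost]  # leave the caller's table untouched
    forced[slot] = [c if i == clip else math.inf for i, c in enumerate(data_cost[slot])]
    return forced


# Clip 0 = running, clip 1 = pushing; running is preferred everywhere,
# but the user forces the push at slot 2.
cost = [[0.0, 1.0] for _ in range(5)]
forced = force_clip(cost, slot=2, clip=1)
```

Because only the constrained slot changes, the slots before and after it are still free to match the "run" annotation, which is the behavior shown in the pushing example.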

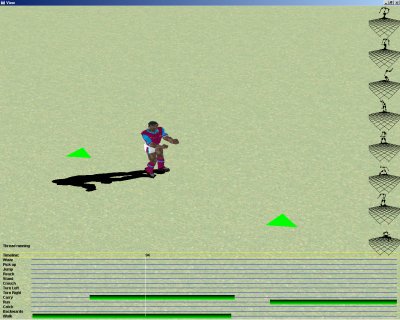

The user interface shows each available annotation label (bottom of the screen) and lets the user paint positive annotations (green bars) and negative annotations (blue bars). Frames that are not painted are interpreted as don't-care. The user can manipulate geometric constraints directly using the green triangles, and can place frame constraints on the timeline by choosing the motion to be performed (right of the screen).

This video demonstrates the auto-annotation process. It was recorded after 10 motions had been annotated by hand (less than 10 percent of the dataset). The remaining motions in the dataset are annotated automatically using SVMs. The user then simply corrects the SVM results, and the SVM for each annotation is retrained.
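The loop described above (train on a few hand-labeled motions, classify the rest, let the user correct, retrain) can be sketched with one binary classifier per annotation label. The version below shows a single label and uses a small linear SVM trained by Pegasos-style stochastic subgradient descent on the hinge loss, written in plain Python so it runs without extra libraries. The feature vectors, the function names, and the assumption that every reviewed prediction (accepted or corrected) becomes training data for the next round are all hypothetical; the paper's features and SVM setup may differ.

```python
import random
from typing import Dict, List, Sequence, Tuple


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 200, seed: int = 0) -> List[float]:
    """Pegasos-style subgradient descent on the L2-regularized hinge loss (labels +1/-1).

    A constant 1.0 feature is appended, so the last weight acts as a (regularized) bias.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(data)), len(data)):
            t += 1
            eta = 1.0 / (lam * t)
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            # shrink toward zero (regularization), then push toward low-margin points
            w = [(1 - eta * lam) * wj for wj in w]
            if margin < 1:
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Return +1 if the motion is predicted to carry the label, else -1."""
    return 1 if sum(wj * xj for wj, xj in zip(w, list(x) + [1.0])) >= 0 else -1


def annotate_round(labeled_X: List[List[float]], labeled_y: List[int],
                   unlabeled_X: List[List[float]],
                   corrections: Dict[int, int]) -> Tuple[List[List[float]], List[int]]:
    """One round: train on reviewed labels, auto-label the rest, apply user corrections."""
    w = train_linear_svm(labeled_X, labeled_y)
    guesses = [predict(w, x) for x in unlabeled_X]
    for idx, label in corrections.items():  # the user fixes some guesses by hand
        guesses[idx] = label
    # reviewed guesses join the training set used when the SVM is retrained
    return labeled_X + unlabeled_X, labeled_y + guesses


# Toy one-dimensional features for a single label such as "run".
X = [[-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0]]
y = [-1, -1, -1, 1, 1, 1]
w = train_linear_svm(X, y)
new_X, new_y = annotate_round(X, y, [[-3.0], [3.0], [0.1]], corrections={2: 1})
```

Since each label gets its own classifier, a correction to one label (for example, marking a frame as "jump") only triggers retraining of that label's SVM.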

The user creates a motion that crouches, then jumps, then runs.

The user asks for a running motion, but the synthesized motion runs backwards. Even though the synthesized motion satisfies the constraint, the user may not want a backwards motion. Here we prohibit backwards motion by painting a negative annotation.

In this video, we demonstrate the use of position constraints to force the synthesized motion to go to a particular position and orientation.

During synthesis, a particular frame or motion can be forced to happen at a specified time. In this video, the user inserts a "tripping" frame to force the synthesized motion to trip.