Reflectance Sharing: Image-based Rendering from a Sparse Set of Images

Abstract

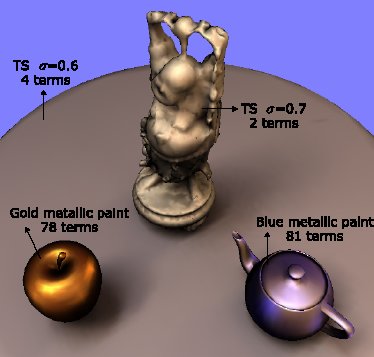

When the shape of an object is known, its appearance is determined by the spatially-varying reflectance function defined on its surface. Image-based rendering methods that use geometry seek to estimate this function from image data. Most existing methods recover a unique angular reflectance function (e.g., BRDF) at each surface point and provide reflectance estimates with high spatial resolution. Their angular accuracy is limited by the number of available images, and as a result, most of these methods either focus on capturing parametric or low-frequency angular reflectance effects, or allow variation in only one of lighting or viewpoint. We present an alternative approach that enables an increase in the angular accuracy of a spatially-varying reflectance function in exchange for a decrease in spatial resolution. By framing the problem as scattered-data interpolation in a mixed spatial and angular domain, reflectance information is shared across the surface, exploiting the high spatial resolution that images provide to fill the holes between sparsely observed view and lighting directions. Since the BRDF typically varies slowly from point to point over much of an object's surface, this method enables image-based rendering from a sparse set of images without assuming a parametric reflectance model. In fact, the method can even be applied in the limiting case of a single input image.
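
The idea of scattered-data interpolation in a mixed spatial and angular domain can be illustrated with a toy sketch. This is not the authors' implementation: it assumes Gaussian radial basis functions, a one-dimensional surface position `x`, a single angular coordinate `theta`, and a synthetic reflectance function. The names `fit_rbf`, `eval_rbf`, `toy_reflectance`, and the kernel width `EPS` are hypothetical and chosen only for illustration. Each "image" contributes samples that are dense in `x` but lie along a single curve in `theta`, and the interpolant fills in the angular gaps between them.

```python
import numpy as np

def fit_rbf(samples, values, eps):
    """Solve for Gaussian RBF weights that interpolate the scattered samples."""
    # Pairwise squared distances in the mixed (position, angle) domain.
    d2 = ((samples[:, None, :] - samples[None, :, :]) ** 2).sum(axis=-1)
    return np.linalg.solve(np.exp(-eps * d2), values)

def eval_rbf(samples, weights, queries, eps):
    """Evaluate the fitted interpolant at arbitrary (position, angle) queries."""
    d2 = ((queries[:, None, :] - samples[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-eps * d2) @ weights

def toy_reflectance(x, theta):
    """Synthetic stand-in: slow spatial variation, sharper angular falloff."""
    return (1.0 + 0.5 * x) * np.exp(-4.0 * theta ** 2)

# Two "images": each observes every surface point, but at one angle per point.
x = np.linspace(0.0, 1.0, 8)
image_a = np.column_stack([x, 0.2 + 0.3 * x])
image_b = np.column_stack([x, 0.9 - 0.2 * x])
samples = np.vstack([image_a, image_b])
values = toy_reflectance(samples[:, 0], samples[:, 1])

EPS = 20.0  # assumed kernel width; it controls how far information is shared
weights = fit_rbf(samples, values, EPS)

# Estimate reflectance at a (position, angle) pair that no image observed.
query = np.array([[0.5, 0.5]])
estimate = eval_rbf(samples, weights, query, EPS)
```

A wider kernel (smaller `EPS`) shares information across more of the surface, which mirrors the trade-off described above: angular coverage improves at the cost of spatial resolution.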

Citation

Todd Zickler, Ravi Ramamoorthi, Sebastian Enrique, and Peter N. Belhumeur. "Reflectance Sharing: Image-based Rendering from a Sparse Set of Images". In Eurographics Symposium on Rendering 2005, volume 28, Aug 2005.